Galway Urban Planning

I’ve spent a lot of time thinking about how urban planning has been done and what could be done to make it better. In many ways the technical challenges aren’t all that big. The main problems are the political challenges around making any change to the physical environment or to people’s behaviour. Even so, there’s a lot that should be done to fix some of the issues we have. I think there are a few issues with Galway that would be relatively easy to fix if you could magic away the money and political problems. For example, much better public transport and dense housing within certain corridors would provide needed housing while also allowing residents to live without cars. If you planned a light rail or bus rapid transit system along an east-west corridor, and planned dense housing along it, you could alleviate many of the challenges the city is facing.

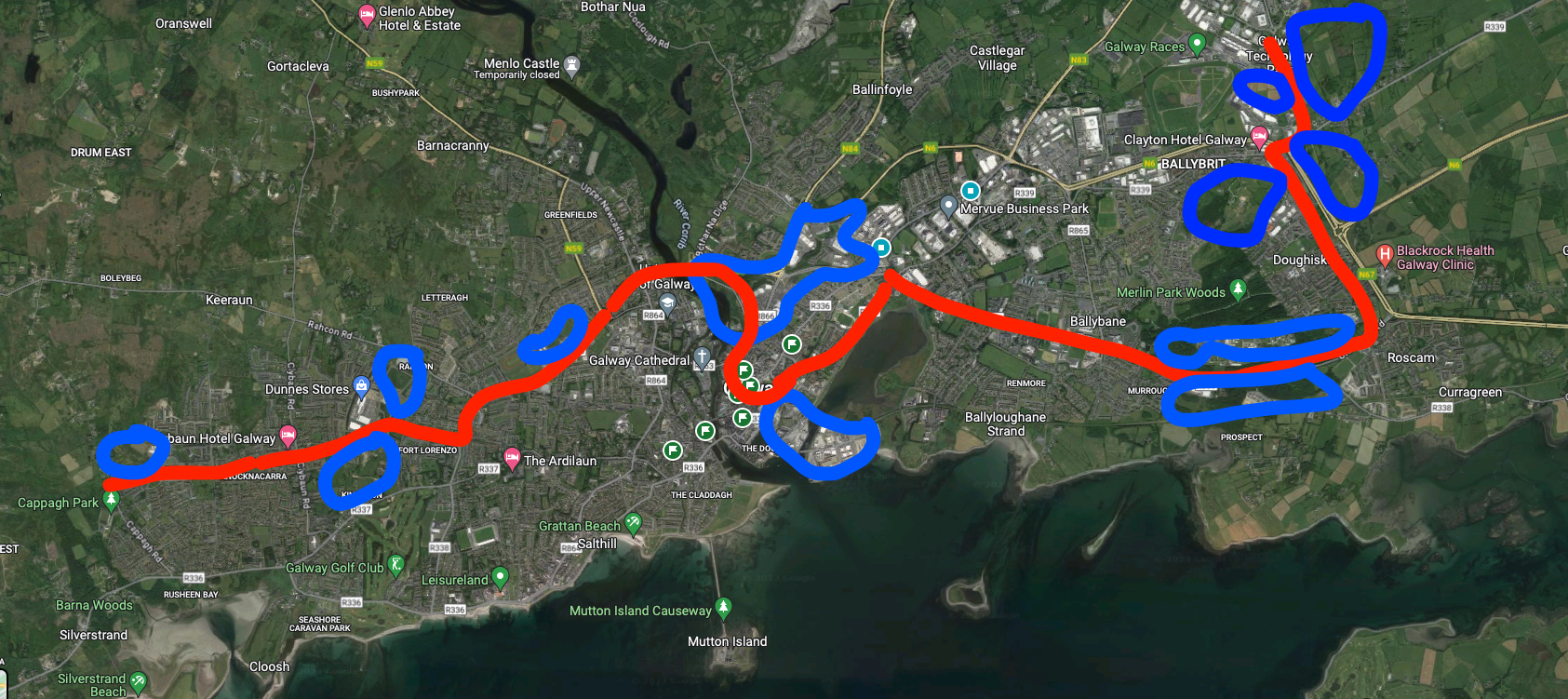

This is the rough idea I had: the red line is the transport corridor, and the blue marks areas alongside that corridor which are currently underdeveloped. The blue areas would be a mix of residential, office and commercial space, each forming its own 5-minute neighbourhood. Currently they are either greenfield sites or shopping centres where most of the land is car parking.

Another feature of Galway is the train line, which runs to the east of the city. Currently there is one train station, which has only a car park around it. There is potential for new stations and for developments around them.
